Full Disclosure: Patrick Smacchia (lead dev of NDepend) offered me an NDepend Professional license so that I could use it and blog about it. I gladly accepted. Now I’m going to tell you how much it rocks, but not because I have to. I had been interested in NDepend for quite some time. It’s a great tool for promoting good application code and architecture, something I’m quite fond of. This is a tool that I would have been using anyway if the project I’m working on were on .Net.

What is NDepend?

In case you have been out of reach of the geeky hype signal recently and have never heard of NDepend, it is a tool that reads into your source code and statically analyses whether the code meets certain design guidelines and quality standards. “Statically” means that it examines your code without actually running it. NDepend is often used in the code-review process during development to easily identify spots in the code that could be improved.

If that sounds like FxCop to you, yes they share the same goal in principle, but they are different in 2 ways:

- FxCop works on fine-grained details. It inspects each instruction or coding structure and checks how it complies with the framework design guidelines. NDepend works more from a high-level application architecture viewpoint. It visualizes the code in terms of dependencies, complexity, and other design metrics expressed as quantifiable numbers, giving you a big-picture idea of the state of the code and helping you spot smelly areas. You can then define thresholds for an acceptable level of code quality. Further down this post, this difference will become crystal clear.

- FxCop gives you a set of rules you can use, but there is no easy way to express your own rule. Adding a new rule involves writing a new add-in to be plugged into FxCop. The primary strength of NDepend is its CQL (Code Query Language), a DSL that allows you to express your own custom rule and evaluate it on the fly as you type.

Using NDepend

NDepend makes me wish my current project at work were written in C#. It is in fact written in Java. Incidentally, having been developed using the big-ball-of-mud approach, the code has degraded into one of the worst codebases I have worked on. That would have been the perfect place to use NDepend and refactor the big mess out loud. Alas, NDepend doesn’t deal with Java. The good news is that the NDepend team has just announced XDepend, an NDepend for Java. But that’s for another post.

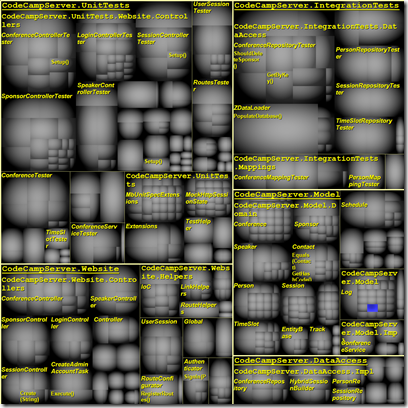

For our purpose, I picked the codebase of an open-source .Net project I am quite familiar with: CodeCampServer, a web application developed using Domain-Driven-Design and Test-Driven-Development on the MVC platform. That perfectly mimics my idea of good application architecture.

The UI of Visual NDepend feels very much like the Visual Studio environment.

Getting NDepend to analyse assemblies is incredibly easy! Literally a couple of intuitive clicks, and before I knew it, I was presented with a fantastic amount of detail about the project.

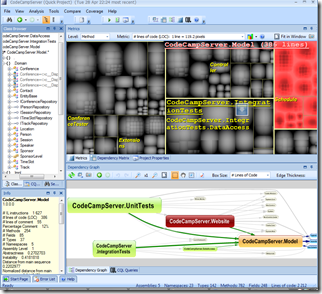

Dependency Graph

The dependency graph is the best way to understand the architecture of the code.

You can navigate deeper to get a more intimate understanding of the code. Since CodeCampServer is built using Domain-Driven-Design, the domain-model layer is more than likely to be our primary interest in understanding the application, particularly when it’s developed by other people.

What we are looking at is a direct x-ray scan of the CodeCampServer.Model.Domain namespace, and it says 1 thing: the code is impressive! It doesn’t take much to notice that CodeCampServer is developed using solid Domain-Driven-Design: the code almost looks like a business document. None of the classes within this namespace has any reference to geeky technical details or infrastructure components. Indeed, the dependency graph of this code directly reflects the business models and their associations, which is readily consumable not only by developers, but also by business people. Big A+ for CodeCampServer.

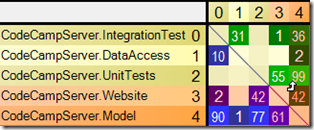

The dependency graph can also be presented in the form of a matrix.

3 rules to read this matrix:

- Green: number of methods in namespace on the left header that directly use members of namespace on the top header

- Blue: number of methods in namespace on the left header that are directly used by members of namespace on the top header

- Black: number of circular direct dependencies between namespaces on the left and on the top headers

As such, the following graph generates English description: 42 methods of the assembly CodeCampServer.Website are using 61 methods of the assembly CodeCampServer.Model

So back to the previous complete matrix: it shows that the CodeCampServer.Model.Domain namespace does not have any reference to any other namespace (all horizontal boxes are blue). In other words, the domain model is the core of this application (see Onion Architecture). All DDD-ish so far.
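To make the colour rules concrete, here is a minimal Python sketch of how such a matrix cell could be derived from a list of member usages. It is only an illustration of the reading rules, not how NDepend is implemented, and the usage data (method and member names) is invented for the example.

```python
from collections import defaultdict

# Hypothetical usages: (user namespace, calling method, used namespace, used member).
# The member names are invented for illustration only.
USAGES = [
    ("Website", "HomeController.Index", "Model.Domain", "Conference.Name"),
    ("Website", "HomeController.Index", "Model.Domain", "Conference.Start"),
    ("Website", "SpeakerController.List", "Model.Domain", "Speaker.Bio"),
    ("DataAccess", "ConferenceRepository.Save", "NHibernate", "ISession.SaveOrUpdate"),
]

def build_matrix(usages):
    """Map (user namespace, used namespace) -> distinct callers and distinct callees."""
    matrix = defaultdict(lambda: {"callers": set(), "callees": set()})
    for user_ns, caller, used_ns, callee in usages:
        if user_ns != used_ns:  # only cross-namespace usages matter here
            matrix[(user_ns, used_ns)]["callers"].add(caller)
            matrix[(user_ns, used_ns)]["callees"].add(callee)
    return matrix

def cell(matrix, row, col):
    """Describe the cell at (row = left header, col = top header)."""
    # Methods of the row namespace that use members of the column namespace.
    uses = len(matrix[(row, col)]["callers"]) if (row, col) in matrix else 0
    # Members of the row namespace that are used by the column namespace.
    used = len(matrix[(col, row)]["callees"]) if (col, row) in matrix else 0
    if uses and used:
        return ("black", uses, used)  # dependency in both directions
    if uses:
        return ("green", uses)
    if used:
        return ("blue", used)
    return ("empty",)

MATRIX = build_matrix(USAGES)
```

With this toy data, the Website row is green against Model.Domain, the Model.Domain row is blue against Website, and the cell between DataAccess and Model.Domain stays empty.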

What I find interesting is that data-access namespace (CodeCampServer.DataAccess) makes *zero* direct communication with CodeCampServer.Model.Domain. It means that none of the repositories makes a direct communication with domain entities at all. This is impressive! It might seem counter-intuitive at first, how can those repositories create instances of domain-entities from DB, as well as save the state of domain-entities to DB without making any communication with any method/property/constructor of domain entity?

CodeCampServer uses NHibernate as ORM which completely decouples data-access concerns from the application code. Repositories in data-access layer simply delegate all CRUD operations of domain entities to NHibernate that will handle the creation and persistence of domain-entities. The repository itself makes totally zero direct communication with domain-entity.

Overall, CodeCampServer has a very good dependency matrix, but you will be surprised by how many unexpected smells you can find in your own application. You might find that many things are not written the way you architected them. If one of your developers takes a shortcut and, say, calls security-checking from a domain entity, you will notice an unexpected reference in the dependency matrix (Domain->Security). You can even set up an automatic alert for when that happens, e.g. when the domain layer has any reference to another namespace. More on that later.

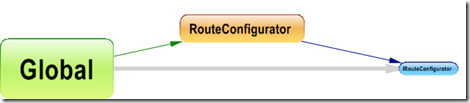

Circular Dependency

This is what we normally need to be alerted to. CodeCampServer.Website.Impl has a circular reference with CodeCampServer.Website. A reference from CodeCampServer.Website into a member of a more specific namespace like CodeCampServer.Website.Impl signals some kind of smell.

You can navigate through the details of the reference by clicking on the box. It turns out that CodeCampServer.Website.Globals makes a reference to CodeCampServer.Website.Impl.RouteConfigurator.

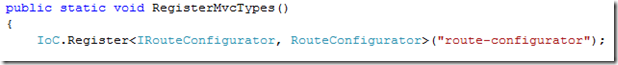

The reference is apparently caused by the Windsor IoC configuration in the Globals.RegisterMvcTypes() method.

Since Globals is only referencing RouteConfigurator by its class, and not communicating with it in any way, NDepend indicates 0 on the reference. These 2 components are entangled intentionally, so this analysis finds no design problem. However, this is a good example of using NDepend feedback to discover potential design smells in many parts of an application. For example, look at the following dependency matrix for the Spring.Net code:

The black areas indicate that those namespaces are tightly entangled. Tight coupling means that all those 6 namespaces can only be understood together, which adds complexity in understanding and maintaining the code. Of course, tight coupling also means that they can only be used together. I.e. to use the dependency-injection capability from Spring.Core, you will need the presence of Spring.Validation in the baggage.
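The idea of "namespaces that can only be understood together" can be sketched as finding groups of namespaces that are mutually reachable in the dependency graph. The following Python sketch assumes a made-up graph: Spring.Core and Spring.Validation are named in the post, but every edge below is hypothetical and says nothing about the real Spring.Net code.

```python
# Hypothetical namespace dependency graph (namespace -> namespaces it uses).
# The edges are invented for illustration only.
GRAPH = {
    "Spring.Core": ["Spring.Validation", "Spring.Util"],
    "Spring.Validation": ["Spring.Expressions"],
    "Spring.Expressions": ["Spring.Core"],
    "Spring.Util": [],
}

def reachable(graph, start):
    """Return every namespace reachable from start by following dependencies."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

def entangled_groups(graph):
    """Group namespaces that depend on each other, directly or indirectly."""
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    reach = {n: reachable(graph, n) for n in nodes}
    groups, assigned = [], set()
    for n in sorted(nodes):
        if n in assigned:
            continue
        # m belongs to n's group when each can reach the other.
        group = {n} | {m for m in reach[n] if n in reach[m]}
        assigned |= group
        if len(group) > 1:
            groups.append(sorted(group))
    return groups
```

Any group returned here has to be shipped, read, and changed as a unit, which is exactly the baggage problem described above.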

Metric

NDepend presents overview information about our code through various metrics. For example, here is the “Lines of Code” metric for CodeCampServer.

CodeCampServer is written with TDD, so unsurprisingly, unit tests take up the majority of the code lines, followed by integration tests. The biggest portion of the application code is in the Website layer. And here is my favorite part: just like a street map, you can zoom deep into this map by scrolling, from the top assembly level down to classes and individual methods! In fact, you can use your mouse scroll to zoom into almost anything in the NDepend user interface.

Now let’s see the map for Cyclomatic Complexity metric. The more if/else/switch statements in your code, the higher Cyclomatic Complexity it gets.
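As a rough illustration of what this metric counts, here is a small Python sketch that approximates cyclomatic complexity as 1 plus the number of decision points. It analyses Python source rather than .Net code, and the set of constructs it counts is a simplification chosen for the example, so it should not be read as NDepend's exact definition.

```python
import ast

# Constructs treated as one decision point each (a simplified choice).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)

def cyclomatic_complexity(source):
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths.
            complexity += len(node.values) - 1
    return complexity

SAMPLE = '''
def grade(score, bonus):
    if score > 90 and bonus:
        return "A"
    elif score > 75:
        return "B"
    for _ in range(3):
        pass
    return "C"
'''
```

For `SAMPLE`, the count is 1 (base) + 2 (`if` and `elif`) + 1 (`and`) + 1 (`for`), which gives 5.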

CodeCampServer is developed using Domain-Driven-Design approach, so when it comes to complexity and decision-making, Model layer takes up most of the space. Unit-test takes just about the same size.

Code Query Language

I can’t repeat this enough: CQL is brilliant! It gives you the power to gather just about any information you need about your source code. CQL is very similar to SQL, but instead of querying a database, you are querying your source code. You can use CQL to quickly search for particular areas of your code that are of interest in terms of code quality.

The information that you can query with CQL is comprehensive. NDepend provides a whopping 82 code metrics that give access to a large range of information. For example:

- Which methods have been refactored since the last release and are not thoroughly covered by unit tests?

SELECT METHODS WHERE CodeWasChanged AND PercentageCoverage < 100

- What public methods could be declared as private?

SELECT METHODS WHERE IsPublic AND CouldBePrivate

- What are the 10 most complex methods?

SELECT TOP 10 METHODS ORDER BY CyclomaticComplexity
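For readers who think better in general-purpose code, the three queries above amount to filtering and sorting a table of per-method facts. The Python sketch below mimics that over a handful of invented records; the `MethodInfo` type, the `select_methods` helper, and all the data are hypothetical, and the descending sort is my assumption about what "most complex" should mean.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MethodInfo:
    name: str
    is_public: bool
    could_be_private: bool
    code_was_changed: bool
    percentage_coverage: float
    cyclomatic_complexity: int

# Invented sample data, for illustration only.
METHODS = [
    MethodInfo("SpeakerController.List", True, False, True, 100.0, 2),
    MethodInfo("Schedule.Resolve", True, True, True, 60.0, 14),
    MethodInfo("Conference.AddSession", True, False, False, 85.0, 5),
    MethodInfo("RouteConfigurator.Register", False, False, True, 0.0, 9),
]

def select_methods(methods, where=lambda m: True, order_by=None, top=None):
    """Filter, optionally sort (highest first), and optionally keep the top N."""
    rows = [m for m in methods if where(m)]
    if order_by is not None:
        rows.sort(key=order_by, reverse=True)
    return rows[:top] if top is not None else rows

# Changed since last release and not fully covered.
changed_uncovered = select_methods(
    METHODS, where=lambda m: m.code_was_changed and m.percentage_coverage < 100)

# Public methods that could be private.
too_visible = select_methods(
    METHODS, where=lambda m: m.is_public and m.could_be_private)

# The 2 most complex methods (the post asks for 10; the sample is tiny).
most_complex = select_methods(
    METHODS, order_by=lambda m: m.cyclomatic_complexity, top=2)
```

The point of CQL is that you get this kind of query without building the table of facts yourself.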

Code Inspection

So far we have been talking about various statistics and analyses, but we haven’t seen the part that matters the most in a static analysis tool: code inspection. You can set up custom coding guidelines using CQL, and let NDepend regularly inspect your codebase against the project standard and issue warnings when somebody violates a rule.

Let’s say, Architectural Guidelines:

- DDD Value Object has to be immutable

WARN IF Count > 0 IN SELECT TYPES WHERE !IsImmutable AND HasAttribute "ValueObject"

- Web layer should not have direct dependency to Data layer

WARN IF Count > 0 IN SELECT METHODS FROM NAMESPACES "Web" WHERE IsDirectlyUsing "Data"

- Repository should not flush NHibernate session. It violates unit-of-work pattern.

WARN IF Count > 0 IN SELECT METHODS FROM NAMESPACES "Data" WHERE IsUsing "NHibernate.ISession.Flush()"

- All public methods of Services has to be surrounded by database transaction

WARN IF Count > 0 IN SELECT METHODS FROM ASSEMBLIES "Services" WHERE IsPublic AND !HasAttribute "Transactional"
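To show the shape of such a rule outside CQL, here is a minimal Python sketch of the "Web should not directly use Data" guideline: collect offending methods, then warn if the count is above zero. The usage pairs and type names are invented, and the helper functions are hypothetical stand-ins rather than anything NDepend exposes.

```python
# Hypothetical direct usages: (calling method, type it touches).
# All names are invented for illustration only.
USAGES = [
    ("Web.Controllers.SpeakerController.List", "Services.ISpeakerService"),
    ("Web.Controllers.AdminController.Purge", "Data.Repositories.SpeakerRepository"),
    ("Services.SpeakerService.Find", "Data.Repositories.SpeakerRepository"),
]

def in_namespace(full_name, namespace):
    """True when full_name is the namespace itself or lives somewhere under it."""
    return full_name == namespace or full_name.startswith(namespace + ".")

def methods_directly_using(usages, from_namespace, to_namespace):
    """Methods under from_namespace that directly touch anything under to_namespace."""
    offenders = {
        caller for caller, used in usages
        if in_namespace(caller, from_namespace) and in_namespace(used, to_namespace)
    }
    return sorted(offenders)

def warn_if_any(rule_name, offenders):
    """Mimic 'WARN IF Count > 0': return a warning string, or None when clean."""
    if offenders:
        return f"WARNING [{rule_name}]: " + ", ".join(offenders)
    return None

web_to_data = methods_directly_using(USAGES, "Web", "Data")
message = warn_if_any("Web must not use Data directly", web_to_data)
```

Only the controller that reaches straight into the repository is flagged; the service that does the same thing is allowed because it sits in a different layer.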

Quality Standards:

- Methods that are way too long, and obviously unreadable

WARN IF Count > 0 IN SELECT METHODS WHERE NbILInstructions > 200 ORDER BY NbILInstructions DESC

- Methods that have too many parameters are smelly.

WARN IF Count > 0 IN SELECT METHODS WHERE NbParameters > 5 ORDER BY NbParameters DESC

- Classes that have too many methods. Chances are they violate Single Responsibility Principle

WARN IF Count > 0 IN SELECT TYPES WHERE NbMethods > 20 ORDER BY LCOM DESC

- Classes that overuse inheritance. You should consider the “favour composition over inheritance” principle

WARN IF Count > 0 IN SELECT TYPES WHERE DepthOfInheritance > 6 ORDER BY DepthOfInheritance DESC

Continuous Quality

Continuous quality is the ultimate goal of code-analysis tools. You can hook NDepend into your project’s continuous integration server.

Hooking NDepend into TeamCity allows it to continuously monitor the quality of the project based on the design rules you specify, and ring the alarm when somebody checks in code that violates the design guidelines of the house. This gives immediate feedback to the developers to fix the code before it degrades the codebase. Yes, we “fix” code NOT only when something is broken, but also when the code does not meet a certain quality threshold! It’s often difficult to get that across to some people.

This ensures not only that all code being checked in can actually be built into an application that satisfies all the requirements (unit tests), but also that the codebase continuously meets an acceptable level of quality.
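The mechanism behind such a quality gate is simple: evaluate the rules, and fail the build when any of them matches. Here is a minimal Python sketch of that idea, using the 200-instruction and 5-parameter thresholds from the rules earlier in this post. The method records and function names are invented, and this is not how NDepend or TeamCity wire things together; it only shows the exit-code convention a build step could rely on.

```python
# Invented metric records for a few methods, for illustration only.
METHODS = [
    {"name": "OrderService.Submit", "NbILInstructions": 240, "NbParameters": 3},
    {"name": "Mapper.Map", "NbILInstructions": 80, "NbParameters": 7},
    {"name": "Speaker.GetName", "NbILInstructions": 12, "NbParameters": 0},
]

# Each rule is (description, predicate that flags an offending method).
RULES = [
    ("Methods too long", lambda m: m["NbILInstructions"] > 200),
    ("Too many parameters", lambda m: m["NbParameters"] > 5),
]

def violations(rules, methods):
    """Return {rule description: [offending method names]} for rules that fired."""
    found = {}
    for description, predicate in rules:
        offenders = [m["name"] for m in methods if predicate(m)]
        if offenders:
            found[description] = offenders
    return found

def exit_code(found):
    """Non-zero means 'fail the build', the usual convention for CI steps."""
    return 1 if found else 0

def main():
    """Print a short report and return the exit code for the build step."""
    found = violations(RULES, METHODS)
    for description, offenders in found.items():
        print(f"{description}: {', '.join(offenders)}")
    return exit_code(found)
```

A build step would end with something like `sys.exit(main())`, so that a violated rule breaks the build just like a failing unit test does.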

TeamCity is a really nice development dashboard that provides a great deal of information about the current state of the application and its historical progression, and NDepend supplies quantitative numbers for various quality metrics that you can track over time. You can even use NDepend to analyse the differences between 2 versions of the source code and their implications for the metrics. You can observe how the metrics evolve over time. In general, this will give you a trend looking more or less like this:

At the start of the project, the code is looking good. Methods are short, classes are awesomely designed, loose coupling is maintained. Over time, as the complexity grows, methods get stuffed with if/else and nonsense code, classes get entangled… The code starts to decay. When the code gets so bad that the heat goes beyond the acceptable level or violates certain coding guidelines, NDepend starts screaming for refactoring.

The developers start cleaning up their mess and refactoring the code while it is still reasonably manageable. The heat cools down a bit, and the quality gets better. As development progresses, the heat keeps jumping up, and each time NDepend gives you immediate feedback, allowing you to refactor the code before it gets too difficult. The overall code quality stays constant over time.

Without continuous inspection of code quality, the diagram would have been:

The code decays steadily as it grows. It becomes less and less manageable, while you keep intending to refactor it once things feel critical. But before you know it, the code is already too difficult to refactor. Making a single change is totally painful, and people end up throwing more mud into it every day until the code turns into a big ball of mud, and the only thing on your mind every day is to scrap the code and move on to the next project. And I know that feeling all too well 😛

Report

When it comes to code analysis reports, NDepend is top notch. There is a ridiculously massive amount of detail about your code, from trivial lines-of-code, test-coverage, and comments, to dependencies and warnings. You can even compare 2 versions of the source code. And all of this can be tracked historically within TeamCity. All these details can be overwhelming, but luckily they are presented with a great high-level summary at the top, followed by increasingly detailed information as you walk down. Here is the big-picture summary of CodeCampServer’s codebase:

Being stable or abstract does not mean anything bad or good about the code. It is just a fact about the code.

- A stable package is one that a lot of components are using. I.e., it has a lot of reasons NOT to change

- Abstractness indicates the ratio of abstract types (interfaces and abstract classes) to all types within the package. An abstract package gives more flexibility in changing the code.

Good quality code is a balance between these 2 properties. It’s about finding equilibrium between 2 extreme characteristics:

- Painful: the package is used by a lot of people, but lacks abstractness, hence it is painful to change

- Useless: the package is used by no one, but it has a lot of abstractness, hence it is over-engineered.
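The balance between these two extremes can be put into numbers. The Python sketch below uses the classic definitions from Robert C. Martin's package metrics, which is what I understand this diagram to be based on: instability I = Ce / (Ca + Ce), abstractness A = abstract types / all types, and distance from the ideal line D = |A + I - 1|. The 0.7 cut-off for flagging a zone is an arbitrary assumption made for this example, not an NDepend setting.

```python
def instability(afferent, efferent):
    """I = Ce / (Ca + Ce): 0 means nothing it depends on, 1 means nobody depends on it."""
    total = afferent + efferent
    return efferent / total if total else 0.0

def abstractness(abstract_types, total_types):
    """A = abstract types (interfaces, abstract classes) / all types in the package."""
    return abstract_types / total_types if total_types else 0.0

def distance_from_main_sequence(a, i):
    """D = |A + I - 1|: 0 is the ideal balance, 1 is as far off as possible."""
    return abs(a + i - 1)

def zone(a, i, threshold=0.7):
    """Classify a package; the threshold is an arbitrary choice for this sketch."""
    if distance_from_main_sequence(a, i) < threshold:
        return "balanced"
    # Far from the line: low A and low I is painful, high A and high I is useless.
    return "pain" if a + i < 1 else "uselessness"
```

For example, a package used by 3 others while using 1 itself has `instability(3, 1) == 0.25`; if 1 of its 4 types is abstract, its abstractness is also 0.25, which puts it at distance 0.5 from the ideal line.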

The website and data-access layers of CodeCampServer are infrastructure implementations, and the application is structured so that nothing depends on them. Thus they’re located at the edge of instability in the diagram. The core of the application, the Model layer, is by nature stable. It cannot easily be changed without affecting the other parts of the application. Luckily it is highly decoupled (using service interfaces and repository interfaces), so it has higher abstractness, although not enough to make it into the green area.

Summary

Weakness

There are, however, some areas where NDepend still falls short.

- CQL is not as powerful as you might expect. The most significant limitation is the absence of any notion of subquery, join, or any other means of traversing relationships between components. What seem to be pretty simple queries are actually impossible to express in the current version of CQL:

- Select all methods in classes that implement IDisposable

- Any method that constructs any class from namespace “Services”. (Rule: services should be injected by IoC)

- Any member in namespace “Services” that has instance field of types from “Domain.Model” namespace. (Rule: DDD services should be stateless)

- All namespaces that refer to another namespace that refers back in the opposite direction (i.e. circular reference)

- Continuous Integration support is a bit weak. In fact, there is no direct support for TeamCity. Constraint violations can’t be fed into the TeamCity alert system. And while TeamCity can store historical NDepend reports as artefacts, there is no easy way to follow the progression of the stats, e.g. growth of code size or dependencies (other than collecting all the reports ourselves), like what TeamCity does with code-coverage graphs. But maybe I’m just too demanding…

- 82 metrics are impressive, and together with CQL they make NDepend far superior to other code-analysis tools in terms of ease of customisation. However, I haven’t found any means to actually extend the metrics (or the constraints). Even though FxCop’s customisability is very limited, it’s fairly easy to write your own plugin and extend the tool yourself.

Having said that, NDepend is a really impressive tool. Life for architects and application designers has never been easier. They no longer need to deal only with whiteboards and CASE tools. NDepend allows them to deal straight with the actual code in real time, looking into the code from an architectural viewpoint. NDepend provides a great deal of flexibility with its brilliant CQL. NDepend is very intuitive, and it took me almost no time to get going and do interesting things.